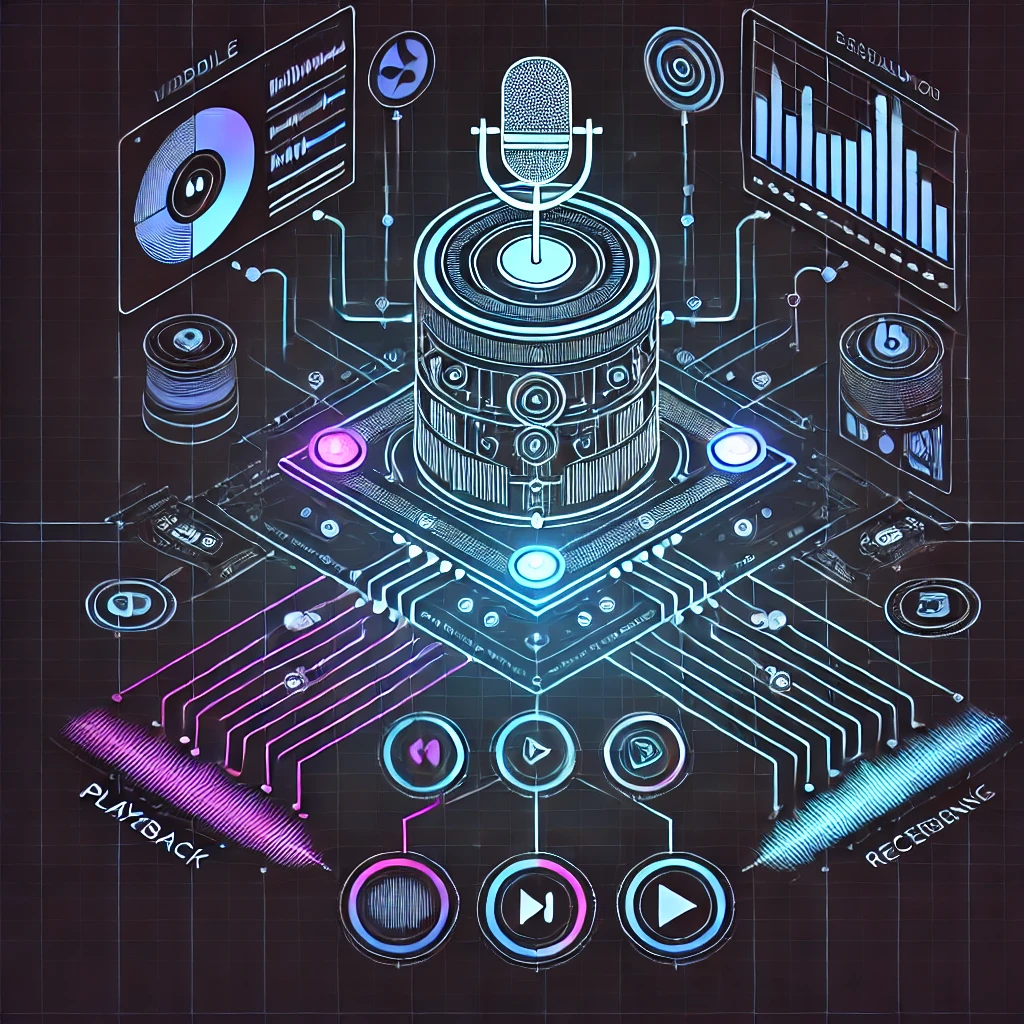

We are entering an era where interaction with technology is increasingly happening through voice. Intelligent voice agents embedded in applications promise a revolution—from booking a restaurant table to navigating complex business processes, all through a natural, fluid conversation. However, beneath the surface of advanced AI models lies a technical layer that determines whether the interaction will be magical or frustrating. That layer is audio session management.

Our experiences, which we described in the article "From Chaos to Harmony," taught us that audio problems in "regular" mobile apps are just the tip of the iceberg. In the world of voice agents, these same challenges escalate, becoming critical barriers to functionality.

Imagine the audio session as an application's nervous system, managing everything related to sound: the microphone, speakers, priorities, and formats. In a simple app that plays music, this system has an easy job. A voice agent, however, is a complex organism that must simultaneously listen to the user, speak its responses, handle interruptions, and keep audio levels balanced.

When this system fails, the entire illusion of a natural conversation shatters.

The challenges we identified while building our custom module are amplified many times over in the context of a voice agent.

In our previous project, a key issue was the slow loading of sounds by the expo-av library. In the case of playing background music, a few seconds of delay is annoying. In a conversation with a voice agent, the same delay before it responds makes the interface useless. The user doesn't know if the system has frozen or if it didn't understand them. A fluid conversation requires reactions in milliseconds, which cannot be achieved without full control over audio buffering and playback.
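One common way to take loading off the critical path is to start it before the sound is needed. The sketch below is only an illustration of that idea, not the module from our project: `PreloadedClipCache` and the `ClipLoader` signature are hypothetical names, and the loader stands in for whatever native call actually decodes or buffers a clip.

```typescript
// Hypothetical loader: resolves to a decoded, ready-to-play buffer of type T.
type ClipLoader<T> = (clipId: string) => Promise<T>;

// Starts loads early and shares one in-flight promise per clip,
// so playback never triggers a second load of the same clip.
class PreloadedClipCache<T> {
  private readonly pending = new Map<string, Promise<T>>();
  private readonly load: ClipLoader<T>;

  constructor(load: ClipLoader<T>) {
    this.load = load;
  }

  // Call ahead of time (for example at app start) for clips the agent will need.
  preload(clipId: string): Promise<T> {
    const existing = this.pending.get(clipId);
    if (existing) return existing;

    const started = this.load(clipId);
    this.pending.set(clipId, started);
    // Forget failed loads so a later call can retry them.
    started.catch(() => {
      if (this.pending.get(clipId) === started) this.pending.delete(clipId);
    });
    return started;
  }

  // At playback time: resolves immediately if the preload already finished.
  get(clipId: string): Promise<T> {
    return this.preload(clipId);
  }
}
```

With this shape, a call such as `cache.preload("greeting")` during startup means a later `cache.get("greeting")` only waits for whatever loading time remains, rather than paying the full cost at the moment the agent needs to respond.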

We determined that popular audio libraries act as "separate entities", fighting for control over the audio session. In a voice agent, this battle is catastrophic. Imagine a scenario where the text-to-speech module (speaking) "locks" the audio session in a way that the speech recognition module (listening) cannot immediately take over. The result? The user tries to interrupt the agent, but their command isn't registered. This is a fundamental design flaw that destroys the agent's usability.
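A minimal sketch of the alternative is a single coordinator that owns the session and hands it between speaking and listening. Everything here is assumed for illustration: `AudioSessionCoordinator`, `AudioHooks`, and the hook names are hypothetical, and how an interruption is detected (a button press, voice activity detection) is left outside the sketch.

```typescript
type SessionMode = "idle" | "listening" | "speaking";

// Hypothetical hooks that a native audio layer would supply.
interface AudioHooks {
  startPlayback(utterance: string): void; // begin text-to-speech output
  stopPlayback(): void;                   // cut output immediately
  startCapture(): void;                   // route the microphone to recognition
  stopCapture(): void;
}

// One owner for the session: every transition goes through this class,
// so the speaking and listening sides never compete for control.
class AudioSessionCoordinator {
  private mode: SessionMode = "idle";
  private readonly hooks: AudioHooks;

  constructor(hooks: AudioHooks) {
    this.hooks = hooks;
  }

  get currentMode(): SessionMode {
    return this.mode;
  }

  speak(utterance: string): void {
    if (this.mode === "listening") this.hooks.stopCapture();
    if (this.mode === "speaking") this.hooks.stopPlayback();
    this.mode = "speaking";
    this.hooks.startPlayback(utterance);
  }

  // Also serves as the interruption path: if the agent is speaking,
  // playback is stopped before capture starts.
  listen(): void {
    if (this.mode === "listening") return;
    if (this.mode === "speaking") this.hooks.stopPlayback();
    this.mode = "listening";
    this.hooks.startCapture();
  }

  // Called by the playback layer when an utterance ends on its own.
  playbackFinished(): void {
    if (this.mode === "speaking") this.mode = "idle";
  }
}
```

The design choice is that neither module can "lock" the session: when the user interrupts, `listen()` stops playback and starts capture in one step, with no negotiation between independent libraries.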

The problem of unbalanced volume levels in the self-tape application was a nuisance. In a voice agent, it's a communication barrier. If the agent responds too loudly, it's uncomfortable. If it's too quiet, the user won't hear the response. Worse, if the sound from the speakers (the agent's speech) is too loud and "leaks" into the microphone, the agent might hear itself, leading to errors in recognizing the user's speech. Precise management of volume and microphone sensitivity is absolutely critical here.
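To make the "agent hears itself" problem concrete, here is a deliberately simple level-based heuristic. It is not echo cancellation and not the approach from our project: the function names, the `leakRatio`, `margin`, and `noiseFloor` parameters, and their default values are all assumptions that would need per-device calibration.

```typescript
// Root-mean-square level of one frame of PCM samples in the range [-1, 1].
function rms(frame: Float32Array): number {
  if (frame.length === 0) return 0;
  let sumOfSquares = 0;
  for (let i = 0; i < frame.length; i++) {
    sumOfSquares += frame[i] * frame[i];
  }
  return Math.sqrt(sumOfSquares / frame.length);
}

// Decides whether the microphone frame is louder than speaker leakage alone
// would explain.
//   leakRatio:  assumed fraction of the playback level that reaches the mic
//   margin:     how far above the expected leak the mic level must be
//   noiseFloor: minimum level treated as sound at all
function isLikelyUserSpeech(
  micFrame: Float32Array,
  playbackFrame: Float32Array,
  leakRatio: number = 0.3,
  margin: number = 2.0,
  noiseFloor: number = 0.01,
): boolean {
  const micLevel = rms(micFrame);
  const expectedLeak = rms(playbackFrame) * leakRatio;
  return micLevel > Math.max(noiseFloor, expectedLeak * margin);
}
```

The louder the agent's own output, the higher the bar the microphone signal has to clear, which is why playback volume and microphone sensitivity have to be managed together rather than by separate libraries.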

Experience has taught us that you cannot build a reliable voice agent by gluing together several off-the-shelf, "black-box" audio libraries. Such an architecture is destined to fail due to conflicts and a lack of control.

Creating a solid foundation for a voice agent requires the same approach we took in our project—building a custom, centralized module for audio session management. Only such a solution provides the granular control necessary to orchestrate complex interactions like seamless transitions between listening and speaking, handling interruptions, and perfectly balancing audio levels.

The quality of an AI agent doesn't just lie in its cloud-based "brain." It starts much lower—in the native, on-device code that makes its voice reliable, clear, and ready for conversation.